This year, Decentralised AI (DAI) has captured the industry spotlight. The resignation of Stability AI CEO Emad Mostaque highlighted the centralisation problem. His departure reflects a growing consensus that AI development should fall under the stewardship of a diverse global community.

This followed a wave of generative AI controversies: deepfakes, the OpenAI boardroom fiasco, Gemini AI’s data bias, and the Getty Images lawsuit against Stability AI.

Having closed a $6 million seed round co-led by Lightspeed Faction and Tagus Capital, FLock is here to change that. We are redirecting governance away from the tight grip of proprietary LLM providers and into the hands of the public.

In light of these events, we lay out our vision and products below.

The problem with centralised control over AI creation

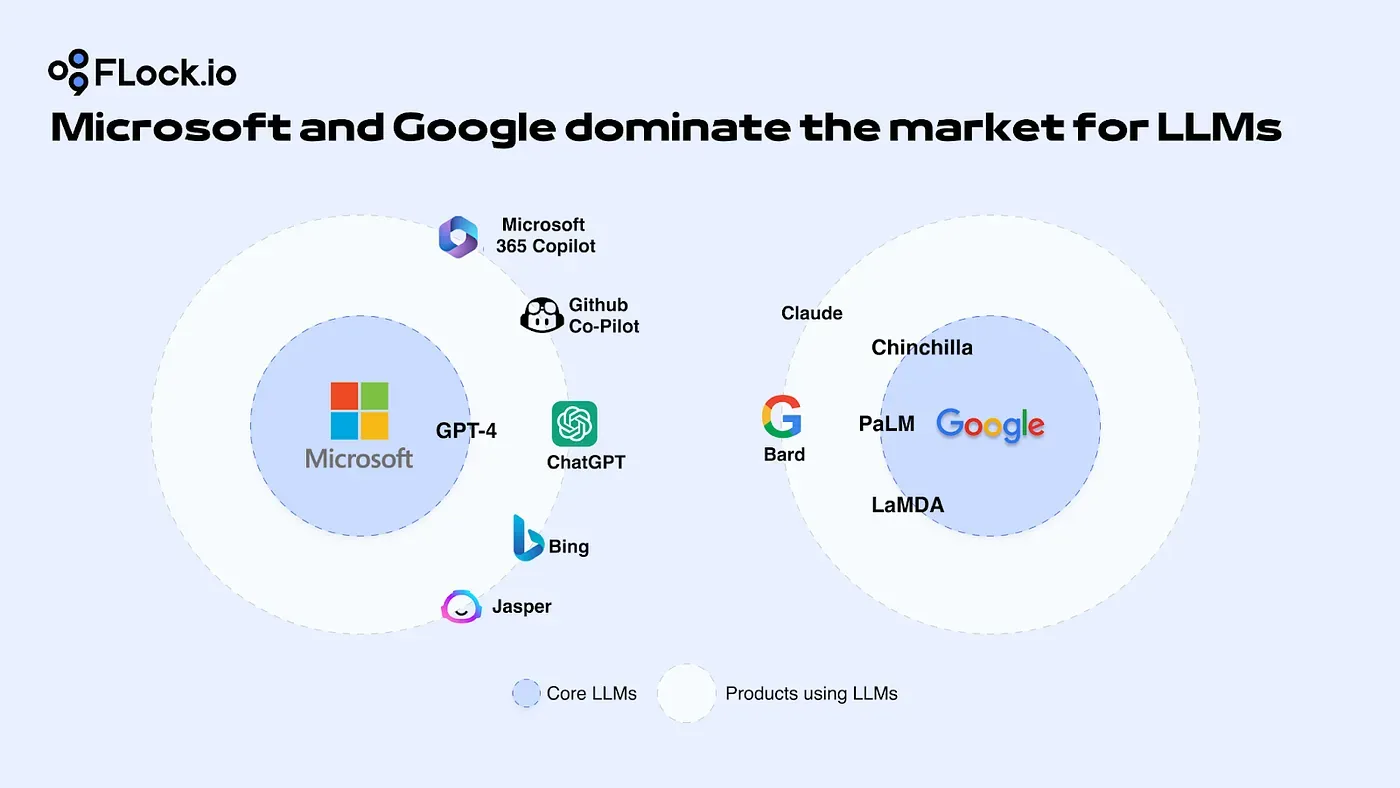

The centralisation problem presents a formidable barrier to AI innovation. The world’s largest corporations steer the trajectory of AI development according to their own objectives, which do not necessarily align with the public interest.

The danger is that corporations’ values and biases are amplified on a global scale. They control access to models, they lack transparency, and their value alignment often degrades model performance.

As a result, we see low public participation, limited computing power, scarcer and lower-quality training data, data bias and inaccuracies, no rewards for data contributors, and a missed opportunity for AI to realise its full potential as a force for good.

FLock is democratising AI creation

FLock seeks to decentralise training and value alignment. We ensure that objectives match the public’s ethics and societal aims, decision-making falls to communities, and usefulness is prioritised.

FLock knocks down barriers impeding participation. We allow developers to provide models, data, or compute in a modular way. The result: a plethora of fit-for-purpose models created by, and for, communities.

Our incentivised platform democratises AI agent training, fine-tuning and inference. It stops the collection of user data and distributes rewards equitably.

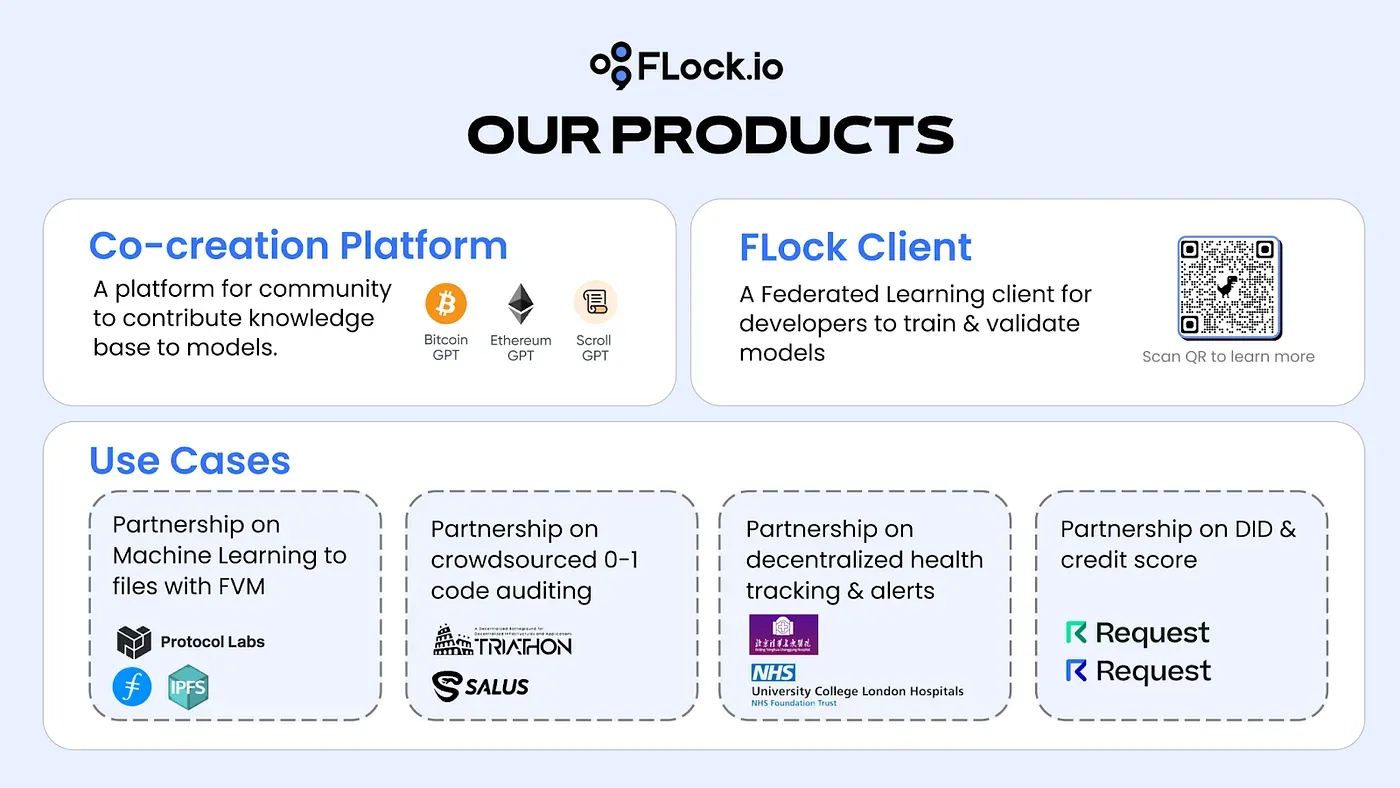

FLock’s three main product offerings

1. On-chain federated learning client keeps data local

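In federated learning, training data remains decentralised on individual devices or servers instead of being sent to a central server, where it could be misused. Each participant trains locally and shares only model updates, which are aggregated into a shared model.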
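To make the idea concrete, here is a minimal sketch of federated averaging (FedAvg), the textbook aggregation scheme, applied to a toy linear model. It is not FLock’s client: the three clients, their data, the learning rate and the helper names (`local_update`, `federated_average`, `run_rounds`) are hypothetical, and a real deployment would add the on-chain coordination and secure communication this sketch leaves out. What it shows is that raw `(x, y)` pairs never leave a client; only fitted weights are averaged.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one client
Weights = Tuple[float, float]        # (slope w, intercept b) of y = w * x + b


def local_update(weights: Weights, data: Dataset, lr: float = 0.05, epochs: int = 20) -> Weights:
    """Run gradient descent on one client's private data; only the weights are returned."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def federated_average(updates: List[Weights], sizes: List[int]) -> Weights:
    """Aggregate client models, weighting each by its number of local examples."""
    total = sum(sizes)
    w = sum(u[0] * s for u, s in zip(updates, sizes)) / total
    b = sum(u[1] * s for u, s in zip(updates, sizes)) / total
    return w, b


def run_rounds(clients: List[Dataset], rounds: int = 30) -> Weights:
    """Each round: broadcast global weights, train locally, average the results."""
    global_weights: Weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(global_weights, data) for data in clients]
        global_weights = federated_average(updates, [len(d) for d in clients])
    return global_weights


if __name__ == "__main__":
    # Hypothetical clients whose private data all follow y = 2x + 1.
    clients = [
        [(0.0, 1.0), (1.0, 3.0)],
        [(2.0, 5.0), (3.0, 7.0), (4.0, 9.0)],
        [(1.5, 4.0)],
    ]
    w, b = run_rounds(clients)
    print(f"w={w:.2f}, b={b:.2f}")
```

Because every client’s data here lies on y = 2x + 1, the global model should end up close to w = 2, b = 1. Weighting by dataset size is the standard FedAvg choice; it keeps a client with a single example from pulling the shared model as hard as one with many.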

FLock’s technology won an award at the NeurIPS conference.

2. Incentivised training (testnet)

Training tasks are set by protocols and developers.

Developers compete in bounties to create models for different communities (e.g. companion chatbots or crypto trading agents), using FLock’s training scripts, FL client, or RAG platform. Validators then evaluate the submitted models.

These models will be used by Web3 and Web2 communities; protocol revenue is shared with model co-creators.
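The bounty flow above (developers submit models, validators judge them, revenue is shared with co-creators) can be sketched as follows. This is an illustrative assumption, not FLock’s published reward formula: it takes the median of each submission’s validator scores, so that one careless or dishonest validator cannot swing the result alone, and splits revenue in proportion to those medians. The participant names and scores are hypothetical.

```python
from statistics import median
from typing import Dict, List


def aggregate_scores(validator_scores: Dict[str, List[float]]) -> Dict[str, float]:
    """Reduce each submission's validator scores to their median (robust to a single outlier)."""
    return {model: median(scores) for model, scores in validator_scores.items()}


def share_revenue(revenue: float, scores: Dict[str, float]) -> Dict[str, float]:
    """Split revenue among co-creators in proportion to their aggregated scores."""
    total = sum(scores.values())
    if total <= 0:
        # Nothing to reward if every submission scored zero.
        return {model: 0.0 for model in scores}
    return {model: revenue * score / total for model, score in scores.items()}


if __name__ == "__main__":
    # Hypothetical scores (0 to 1) from three validators for three submissions.
    validator_scores = {
        "alice": [0.8, 0.9, 0.7],
        "bob": [0.4, 0.5, 0.6],
        "carol": [0.1, 0.9, 0.0],  # one outlier validator rates carol highly
    }
    scores = aggregate_scores(validator_scores)
    payouts = share_revenue(1400.0, scores)
    for model, amount in payouts.items():
        print(f"{model}: {amount:.2f}")
```

With these made-up numbers the medians are 0.8, 0.5 and 0.1, so a revenue pool of 1400 splits roughly into 800, 500 and 100; the single generous score for carol has no effect.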

3. RAG chatbots (part of AI co-creation platform beta)

FLock’s library ranges from intelligent agents to sophisticated trading and confluence bots. It supports integration with external databases, and retrieval-augmented generation (RAG) improves domain-specific accuracy.
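For readers new to retrieval-augmented generation, the sketch below shows the basic loop: score knowledge-base passages against the user’s question, keep the best matches, and place them in the prompt sent to the language model. It uses plain bag-of-words cosine similarity as a stand-in for the learned embeddings and vector database a production RAG system would normally use. The helper names and example passages are hypothetical, and this does not describe FLock’s platform internals.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> Counter:
    """Lowercase the text and count alphanumeric tokens."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two token-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Return the k passages most similar to the query."""
    q = tokenize(query)
    ranked = sorted(documents, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: List[str], k: int = 2) -> str:
    """Ground the model's prompt in the retrieved passages."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Answer using only the context below.\nContext:\n{context}\nQuestion: {query}"


if __name__ == "__main__":
    # Hypothetical knowledge-base passages for a crypto-focused chatbot.
    passages = [
        "Bitcoin halving cuts the block reward in half.",
        "Ethereum uses proof of stake.",
        "The halving happens roughly every four years.",
    ]
    print(build_prompt("When is the next bitcoin halving?", passages))
```

Swapping `tokenize` and `cosine` for an embedding model would change retrieval quality but not the overall structure: retrieve, then generate from a prompt grounded in what was retrieved.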

FLock invites you to develop domain-specific chatbots, enhance the agents’ knowledge bases by contributing your idle resources, interact with the models to evaluate them, and refine the data for quality assurance.

One use case is BTC-GPT, which has reached 10k model calls.

FLock’s part in the Web3 ecosystem

FLock is blockchain-enabled and positioned in the foundational infrastructure layer of the Web3 AI ecosystem.

FLock supports pre-training and fine-tuning of models used by Web3 trading and transaction agents, search, and AI companions. Our training scripts and model hosting will be compatible with decentralised cloud providers.

FLock supports various model structures, from complex neural networks to simpler statistical models. It can be plugged into any decentralised hosting network (e.g. io.net, Gensyn, Ritual) to improve model access, and into any dApp to improve performance.